Zep Memory

Feature Title: Zep Memory - Open Source

Overview

Zep Memory is a powerful long-term memory store for LLM applications in AnswerAI. It stores, summarizes, embeds, indexes, and enriches chatbot histories, making them accessible through simple, low-latency APIs. This feature allows your AnswerAI workflows to maintain context and remember previous conversations efficiently.

Key Benefits

- Long-term memory storage for chatbots and LLM applications

- Efficient summarization and indexing of conversation histories

- Easy integration with AnswerAI workflows

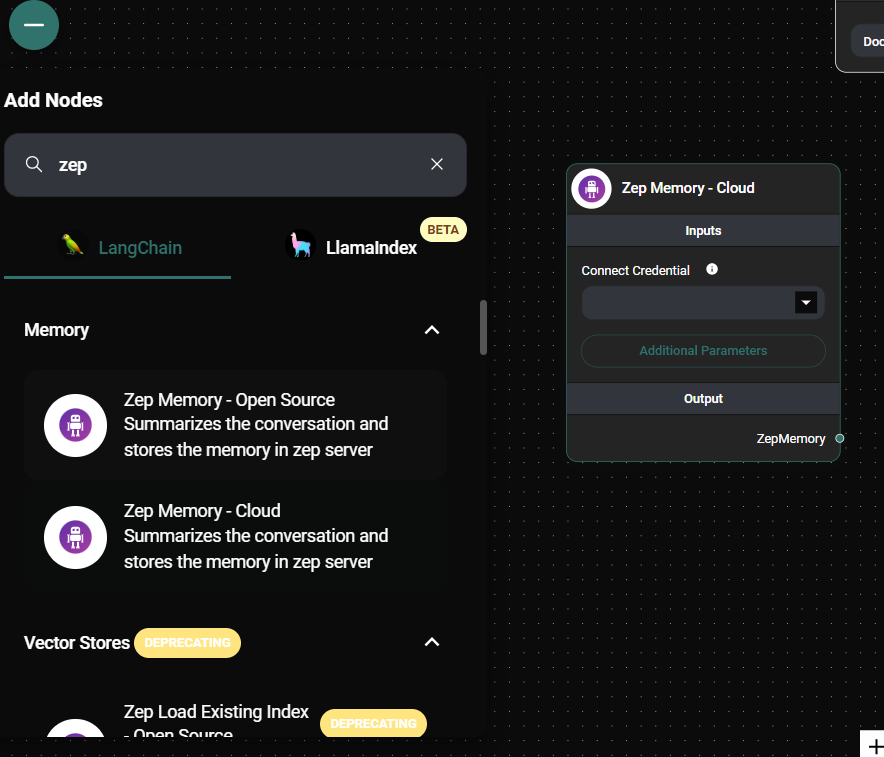

How to Use (Zep Cloud Node)

- Add the Zep Memory - Cloud node to your AnswerAI workflow canvas.

- Configure the node settings:

  - Connect your Zep Memory API credential (optional)

  - Set a Session ID (optional)

  - Choose the Memory Type (perpetual or message_window)

  - Customize prefixes and keys as needed

Zep Memory Cloud Node Configuration Panel & Drop UI

- Connect the Zep Memory - Cloud node to your conversation flow.

- Run your workflow to utilize Zep's cloud-based memory storage.

Tips and Best Practices

- Use a consistent Session ID for related conversations to maintain context over time.

- Choose the appropriate Memory Type based on your use case:

  - "perpetual" for ongoing, long-term conversations

  - "message_window" for conversations with a limited context window

- Customize the AI and Human prefixes to match your conversation style.

- Utilize the Memory Key, Input Key, and Output Key to organize your data effectively.
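One way to keep the Session ID consistent is to derive it from identifiers you already have, rather than storing a random value. The sketch below is a minimal illustration and not part of AnswerAI or Zep: the function name `session_id_for`, the `topic` parameter, and the namespace string are all hypothetical.

```python
import uuid

# Hypothetical fixed namespace for this sketch. Any UUID works,
# as long as it never changes between runs.
SESSION_NAMESPACE = uuid.uuid5(uuid.NAMESPACE_DNS, "sessions.example.invalid")


def session_id_for(user_id: str, topic: str = "default") -> str:
    """Return the same session ID every time for the same user and topic."""
    return str(uuid.uuid5(SESSION_NAMESPACE, f"{user_id}:{topic}"))


if __name__ == "__main__":
    # The printed value can be pasted into the node's Session ID field.
    print(session_id_for("user-42", "support"))
```

Because the ID is computed from its inputs, related conversations for the same user and topic always map to the same session without any extra bookkeeping.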

Troubleshooting

- Authentication Issues: Ensure your Zep Memory API credential is correctly configured in AnswerAI.

- Missing Context: Verify that the Session ID is consistent across related conversations.

- Unexpected Behavior: Double-check the Memory Type setting to ensure it aligns with your intended use case.

Advanced Configuration

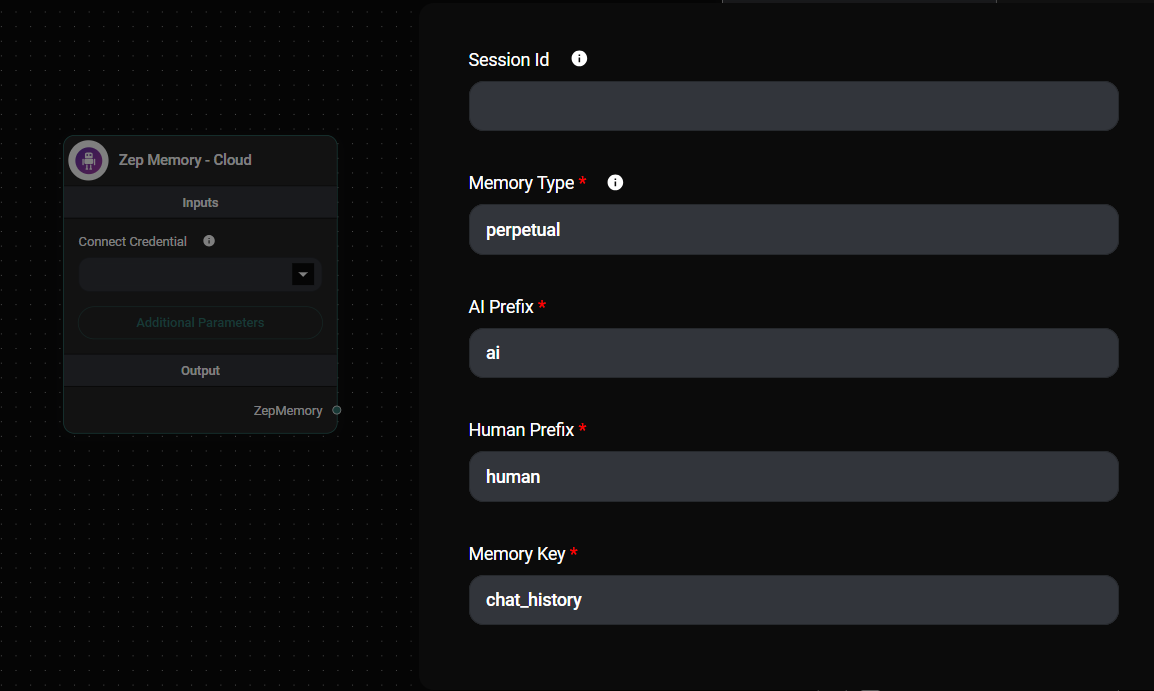

The Zep Memory - Cloud node offers several advanced configuration options:

- Session ID: A unique identifier for the conversation. If not specified, a random ID will be generated.

- Memory Type: Choose either "perpetual" (default) or "message_window" to control how memory is managed.

- AI Prefix: The prefix used to identify AI-generated messages (default: "ai").

- Human Prefix: The prefix used to identify human-generated messages (default: "human").

- Memory Key: The key used to store and retrieve memory data (default: "chat_history").

- Input Key: The key used for input data (default: "input").

- Output Key: The key used for output data (default: "text").


By leveraging these options, you can fine-tune the Zep Memory - Cloud node to best suit your specific use case and integration requirements.
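To make the roles of the prefixes and keys more concrete, here is a rough sketch of how they could fit together when a stored conversation is turned into prompt variables. This is an assumption about typical memory behavior, not AnswerAI's actual implementation. The `Message` class and both function names are hypothetical, and only the default values come from the list above.

```python
from dataclasses import dataclass


@dataclass
class Message:
    role: str      # "ai" or "human" in this sketch
    content: str


def render_history(messages, ai_prefix="ai", human_prefix="human"):
    """Join messages into one transcript, labelling each line with its prefix."""
    lines = []
    for message in messages:
        prefix = ai_prefix if message.role == "ai" else human_prefix
        lines.append(f"{prefix}: {message.content}")
    return "\n".join(lines)


def build_prompt_variables(messages, user_input,
                           memory_key="chat_history", input_key="input"):
    """Place the transcript under the Memory Key and the new message under the Input Key."""
    return {
        memory_key: render_history(messages),
        input_key: user_input,
    }


if __name__ == "__main__":
    history = [Message("human", "Hi"), Message("ai", "Hello! How can I help?")]
    print(build_prompt_variables(history, "What did I just say?"))
```

If your prompt template refers to the history under a different variable name, changing the Memory Key is what keeps the two in sync.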

How to Use (Custom Server)

- Deploy a Zep server (see deployment guides below)

- In your AnswerAI canvas, add the Zep Memory node to your workflow

- Configure the Zep Memory node with your Zep server's base URL

- Connect the Zep Memory node to your conversation flow

- Save and run your workflow

Configuring the Zep Memory Node

- Base URL: Enter the URL of your deployed Zep server (e.g., http://127.0.0.1:8000)

- Session ID: (Optional) Specify a custom session ID or leave blank for a random ID

- Size: Set the number of recent messages to use as context (default: 10)

- AI Prefix: Set the prefix for AI messages (default: 'ai')

- Human Prefix: Set the prefix for human messages (default: 'human')

- Memory Key: Set the key for storing memory (default: 'chat_history')

- Input Key: Set the key for input values (default: 'input')

- Output Key: Set the key for output values (default: 'text')
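The settings above can be summarized as a small data structure, together with a sketch of what a Size of 10 means in practice: only the most recent messages are passed along as context. The class `ZepNodeSettings` and the function `recent_messages` are hypothetical names used for illustration. The defaults are the ones listed above, and the windowing logic is an assumption about the behavior, not Zep's actual code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ZepNodeSettings:
    base_url: str                    # e.g. "http://127.0.0.1:8000"
    session_id: str = ""             # blank means a random ID is generated
    size: int = 10                   # number of recent messages used as context
    ai_prefix: str = "ai"
    human_prefix: str = "human"
    memory_key: str = "chat_history"
    input_key: str = "input"
    output_key: str = "text"


def recent_messages(history: list, size: int) -> list:
    """Return the last `size` messages, or an empty list for a non-positive size."""
    if size <= 0:
        return []
    return history[-size:]


if __name__ == "__main__":
    settings = ZepNodeSettings(base_url="http://127.0.0.1:8000")
    history = [f"message {i}" for i in range(25)]
    print(recent_messages(history, settings.size))  # messages 15 through 24
```

A larger Size gives the model more context per request at the cost of longer prompts, which is the trade-off to keep in mind when tuning it.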

Tips and Best Practices

- Use a consistent Session ID for related conversations to maintain context across multiple interactions

- Adjust the Size parameter based on your application's needs for balancing context and performance

- Regularly monitor your Zep server's performance and scale as needed

- Implement proper security measures, including JWT authentication, for production deployments

Troubleshooting

- Connection issues: Ensure your Zep server is running and accessible from your AnswerAI instance

- Memory not persisting: Verify that the Session ID is consistent across interactions

- Slow performance: Consider adjusting the Size parameter or scaling your Zep server
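For connection issues, a quick first check is whether the host and port in your Base URL accept TCP connections from the machine running AnswerAI. The sketch below uses only the Python standard library. The function names are hypothetical, and a successful connection only shows that something is listening on that port, not that it is a healthy Zep server.

```python
import socket
from urllib.parse import urlsplit


def host_and_port(base_url: str) -> tuple[str, int]:
    """Extract the host and port from a base URL, using the scheme's default port if none is given."""
    parts = urlsplit(base_url)
    if parts.hostname is None:
        raise ValueError(f"no host found in {base_url!r}; include the scheme, e.g. http://")
    port = parts.port or (443 if parts.scheme == "https" else 80)
    return parts.hostname, port


def is_reachable(base_url: str, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to the URL's host and port succeeds."""
    host, port = host_and_port(base_url)
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    url = "http://127.0.0.1:8000"
    print(f"{url} reachable: {is_reachable(url)}")
```

Run it from the same host or container as AnswerAI, since a server that is reachable from your laptop may still be blocked from there by a firewall.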

Deployment Guides (Custom Server)

Deploying Zep to Render

- Go to the Zep GitHub repository

- Click the "Deploy to Render" button

- On Render's Blueprint page, click "Create New Resources"

- Wait for the deployment to complete

- Copy the deployed URL from the "zep" application in your Render dashboard

Deploying Zep to Digital Ocean (via Docker)

- Clone the Zep repository:

  `git clone https://github.com/getzep/zep.git`

  `cd zep`

- Create and edit the `.env` file:

  `nano .env`

- Add your OpenAI API Key to the `.env` file:

  `ZEP_OPENAI_API_KEY=your_api_key_here`

- Build and run the Docker container:

  `docker compose up -d --build`

- Allow firewall access to port 8000:

  `sudo ufw allow from any to any port 8000 proto tcp`

  `ufw status numbered`

Note: If using Digital Ocean's dashboard firewall, ensure port 8000 is added there as well.
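Before building the container, it can help to confirm that the `.env` file really contains the key from the step above. The following is a minimal sketch that handles simple `KEY=value` lines only and makes no claim about how Docker Compose or Zep parse the file. The function names and the placeholder check are hypothetical.

```python
PLACEHOLDERS = {"your_api_key_here"}


def parse_env(text: str) -> dict[str, str]:
    """Parse simple KEY=value lines, skipping blanks and # comments."""
    values = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def missing_keys(text: str, required=("ZEP_OPENAI_API_KEY",)) -> list[str]:
    """Return required keys that are absent, empty, or still set to a placeholder."""
    values = parse_env(text)
    return [
        key for key in required
        if not values.get(key) or values[key] in PLACEHOLDERS
    ]


if __name__ == "__main__":
    with open(".env", encoding="utf-8") as env_file:
        problems = missing_keys(env_file.read())
    print("OK" if not problems else f"Missing or unset: {problems}")
```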

Zep Authentication

To secure your Zep instance using JWT authentication:

-

Download the

zepcliutility from the releases page -

Generate a secret and JWT token:

- On Linux or macOS:

./zepcli -i - On Windows:

zepcli.exe -i

- On Linux or macOS:

-

Configure auth environment variables on your Zep server:

ZEP_AUTH_REQUIRED=true

ZEP_AUTH_SECRET=<the secret you generated> -

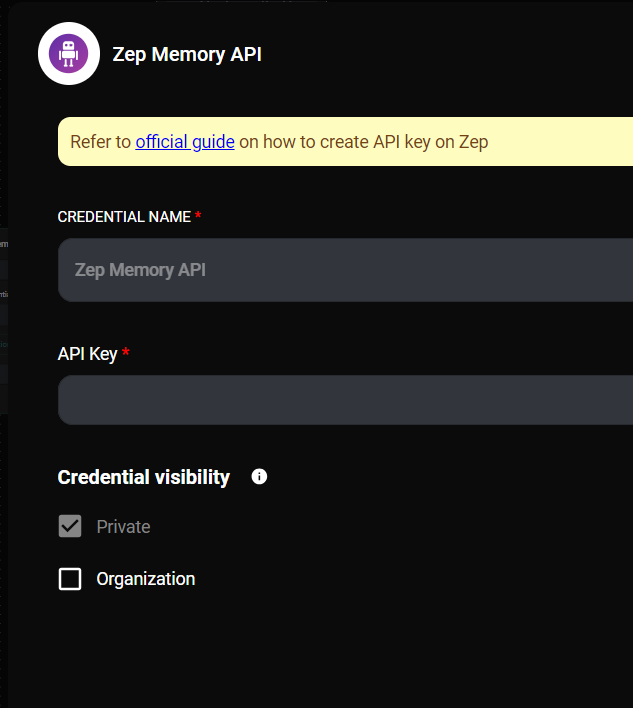

In AnswerAI, create a new credential for Zep:

- Add a new credential

- Enter the JWT Token in the API Key field

-

Use the created credential in the Zep Memory node:

- Select the credential in the "Connect Credential" field of the Zep Memory node

Zep Memory Node Credential & Drop UI

By following these steps, you'll have a secure, authenticated connection between your AnswerAI workflow and your Zep memory server.
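A common mistake in this setup is pasting the secret into the API Key field instead of the JWT token. The sketch below is a quick shape check, assuming the token is a standard compact JWT (three base64url segments separated by dots). It does not verify the signature, and the function names are hypothetical.

```python
import base64
import json


def _b64url_decode(segment: str) -> bytes:
    """Decode a base64url segment, restoring any stripped padding."""
    padding = "=" * (-len(segment) % 4)
    return base64.urlsafe_b64decode(segment + padding)


def jwt_header(token: str) -> dict:
    """Return the decoded JWT header, or raise ValueError if the token is malformed."""
    parts = token.strip().split(".")
    if len(parts) != 3:
        raise ValueError("expected three dot-separated segments")
    header = json.loads(_b64url_decode(parts[0]))
    if not isinstance(header, dict):
        raise ValueError("JWT header is not a JSON object")
    return header


if __name__ == "__main__":
    candidate = input("Paste the value from the API Key field: ")
    try:
        print("Looks like a JWT, header:", jwt_header(candidate))
    except ValueError as error:
        print("This does not look like a JWT:", error)
```

If the check fails, the value is most likely the secret from `ZEP_AUTH_SECRET`, which belongs on the server and not in the AnswerAI credential.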