Conversation Summary Buffer Memory

Overview

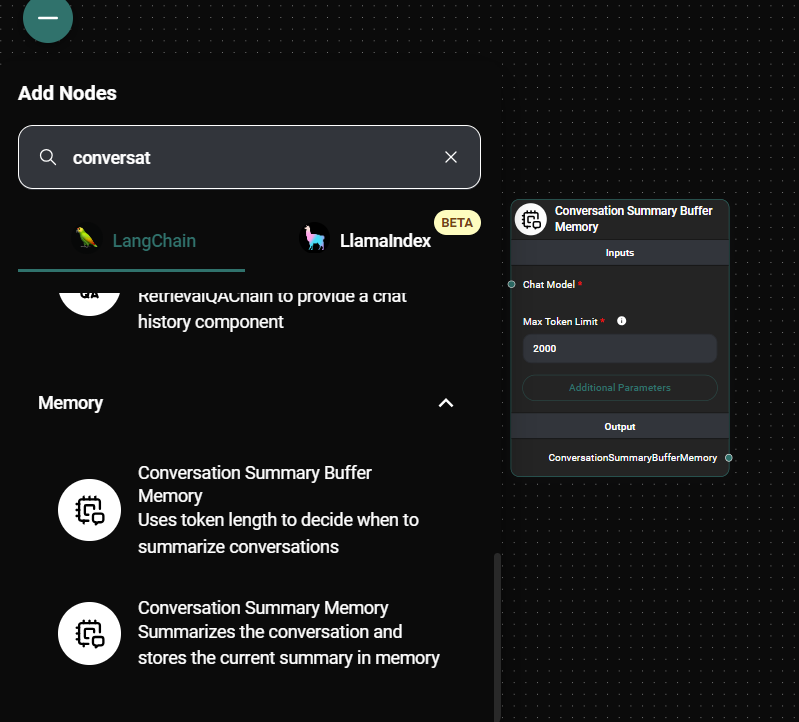

Conversation Summary Buffer Memory is an AnswerAI memory type that uses token count to decide when to summarize a conversation. When the conversation reaches the configured token limit, its older parts are summarized, so long conversations keep their context without exceeding the limit.
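The behavior described above can be sketched in a few lines of Python. This is a minimal illustration, not AnswerAI's actual implementation: the class `SummaryBufferSketch`, the word-based `count_tokens` helper, and the `toy_summarize` function (a stand-in for the chat model call) are all assumptions made for the example.

```python
from typing import Callable, List


def count_tokens(text: str) -> int:
    """Rough stand-in for a tokenizer: counts whitespace-separated words."""
    return len(text.split())


class SummaryBufferSketch:
    """Hypothetical illustration of a summary buffer; names are assumptions."""

    def __init__(self, summarize: Callable[[str, List[str]], str],
                 max_token_limit: int = 2000) -> None:
        self.summarize = summarize            # stand-in for the chat model call
        self.max_token_limit = max_token_limit
        self.summary = ""                     # running summary of pruned messages
        self.buffer: List[str] = []           # recent messages kept verbatim

    def buffer_tokens(self) -> int:
        return sum(count_tokens(m) for m in self.buffer)

    def add_message(self, message: str) -> None:
        self.buffer.append(message)
        pruned: List[str] = []
        # Move the oldest messages out of the buffer until it fits the limit.
        while self.buffer and self.buffer_tokens() > self.max_token_limit:
            pruned.append(self.buffer.pop(0))
        if pruned:
            # Fold the pruned messages into the running summary.
            self.summary = self.summarize(self.summary, pruned)


def toy_summarize(previous: str, pruned: List[str]) -> str:
    """Toy summarizer: keeps only the first word of each pruned message."""
    heads = [m.split()[0] for m in pruned if m.split()]
    return " ".join(([previous] if previous else []) + heads)


memory = SummaryBufferSketch(toy_summarize, max_token_limit=6)
memory.add_message("alpha one two")      # 3 tokens in the buffer
memory.add_message("beta three four")    # 6 tokens, still within the limit
memory.add_message("gamma five six")     # 9 tokens, so the oldest message is pruned
```

After the third message, the oldest message has moved out of `memory.buffer` and into `memory.summary`, while the two most recent messages remain verbatim. A real deployment would count tokens with the model's tokenizer rather than by splitting on whitespace.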

Key Benefits

- Maintains context for long conversations without exceeding token limits

- Automatically summarizes older parts of the conversation

- Allows customization of token limits and memory settings

How to Use

- Add the Conversation Summary Buffer Memory node to your AnswerAI workflow canvas.

- Configure the node with the following settings:

  a. Chat Model: Select the language model to use for summarization.

  b. Max Token Limit: Set the maximum number of tokens before summarization occurs (default is 2000).

  c. Session ID (optional): Specify a unique identifier for the conversation. If not provided, a random ID will be generated.

  d. Memory Key (optional): Set the key used to store the chat history (default is 'chat_history').

- Connect the memory node to other nodes in your workflow that require conversation history.
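To make the settings and their defaults concrete, here is a hedged sketch that models them as a Python dataclass. The class name `SummaryBufferMemoryConfig`, its field names, and the example values are hypothetical. Only the defaults (2000 tokens, `'chat_history'`, and a random Session ID when none is given) come from the settings listed above.

```python
import uuid
from dataclasses import dataclass
from typing import Optional


@dataclass
class SummaryBufferMemoryConfig:
    """Hypothetical container mirroring the node's settings (names are assumptions)."""

    chat_model: str                       # model used for summarization (required)
    max_token_limit: int = 2000           # documented default
    session_id: Optional[str] = None      # optional; a random ID is generated if omitted
    memory_key: str = "chat_history"      # documented default

    def __post_init__(self) -> None:
        if self.max_token_limit <= 0:
            raise ValueError("max_token_limit must be positive")
        if not self.session_id:
            # Mirror the documented behavior: generate a random ID when none is given.
            self.session_id = str(uuid.uuid4())


# Only the chat model is supplied; every other setting falls back to its default.
default_cfg = SummaryBufferMemoryConfig(chat_model="example-model")

# An explicit Session ID and a lower limit for a hypothetical support workflow.
pinned_cfg = SummaryBufferMemoryConfig(
    chat_model="example-model",
    session_id="support-ticket-42",
    max_token_limit=1000,
)
```

In the node itself these values are entered through the UI. The sketch only shows which settings are required and what happens when the optional ones are left blank.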

Conversation Summary Buffer Memory node in a workflow & Drop UI

Tips and Best Practices

- Choose an appropriate Max Token Limit based on your use case and the complexity of your conversations.

- Use a consistent Session ID for related conversations to maintain context across multiple interactions.

- Experiment with different Chat Models to find the best balance between summarization quality and performance.

- Monitor the summarized content to ensure important information is not lost during the summarization process.
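One lightweight way to monitor summaries, shown here as a sketch only, is to check that terms you consider essential still appear in the summarized text. The `missing_terms` helper and the sample summary are hypothetical and are not part of AnswerAI.

```python
from typing import Iterable, List


def missing_terms(summary: str, required_terms: Iterable[str]) -> List[str]:
    """Return the required terms that do not appear in the summary (case-insensitive)."""
    lowered = summary.lower()
    return [term for term in required_terms if term.lower() not in lowered]


# Hypothetical summary text produced after older messages were condensed.
summary = "The user asked about a refund for order 1187 and prefers email contact."

# Terms that should survive summarization in this example conversation.
lost = missing_terms(summary, ["order 1187", "refund", "shipping address"])
```

Here `lost` contains only `"shipping address"`, which signals that this detail did not survive summarization. A substring check like this is crude, but it can flag cases where raising the Max Token Limit or trying a different Chat Model is worth a look.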

Troubleshooting

- Issue: Conversation context seems to be lost unexpectedly.
  Solution: Check if the Max Token Limit is set too low. Increase the limit to allow for more context retention.

- Issue: Summarization is not occurring as expected.
  Solution: Verify that the Chat Model is properly connected and functioning. Ensure that the conversation length is actually reaching the Max Token Limit.

- Issue: Memory is not persisting across sessions.
  Solution: Make sure you're using a consistent Session ID for related conversations. If the issue persists, check your database configuration and connections.
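The Session ID issue is easier to see with a small sketch. The in-memory `SessionStoreSketch` below is a hypothetical stand-in for whatever storage backs the memory node. It illustrates only how history is keyed by Session ID, not how AnswerAI persists data.

```python
from collections import defaultdict
from typing import DefaultDict, List


class SessionStoreSketch:
    """Hypothetical in-memory store that keys chat history by Session ID."""

    def __init__(self) -> None:
        self._histories: DefaultDict[str, List[str]] = defaultdict(list)

    def append(self, session_id: str, message: str) -> None:
        self._histories[session_id].append(message)

    def history(self, session_id: str) -> List[str]:
        # Return a copy so callers cannot mutate the stored history.
        return list(self._histories.get(session_id, []))


store = SessionStoreSketch()

# Two interactions that reuse the same Session ID share one history.
store.append("customer-7", "My invoice total looks wrong.")
store.append("customer-7", "It should be 40, not 45.")
same_session = store.history("customer-7")

# A different (for example, randomly generated) Session ID starts from nothing.
new_session = store.history("customer-8")
```

`same_session` holds both messages, while `new_session` is empty. If a workflow lets a random ID be generated on each run, every run behaves like `new_session`, which looks like memory failing to persist.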

Handle memory operations with care, especially when conversations contain sensitive data.