OpenAI Embeddings Custom

Overview

The OpenAI Embeddings Custom feature in AnswerAI allows you to generate customized embeddings for your text using OpenAI's powerful API. This feature provides flexibility in creating embeddings tailored to your specific needs, enabling more accurate and relevant text representations for various natural language processing tasks.

Key Benefits

- Customizable embeddings for improved text representation

- Fine-tuned control over embedding generation parameters

- Seamless integration with OpenAI's state-of-the-art language models

How to Use

- Navigate to the Embeddings section in AnswerAI.

- Select "OpenAI Embeddings Custom" from the available options.

- Connect your OpenAI API credential.

- Configure the embedding parameters:

- Strip New Lines: Choose whether to remove newline characters from the input text.

- Batch Size: Set the number of text chunks to process in a single API call.

- Timeout: Specify the maximum time (in milliseconds) to wait for the API response.

- Base Path: Enter a custom base path for the API endpoint, if necessary.

- Model Name: Select the OpenAI model to use for generating embeddings.

- Dimensions: Specify the number of dimensions for the output embeddings.

- Input your text data for embedding generation.

- Run the embedding process to obtain your custom embeddings.

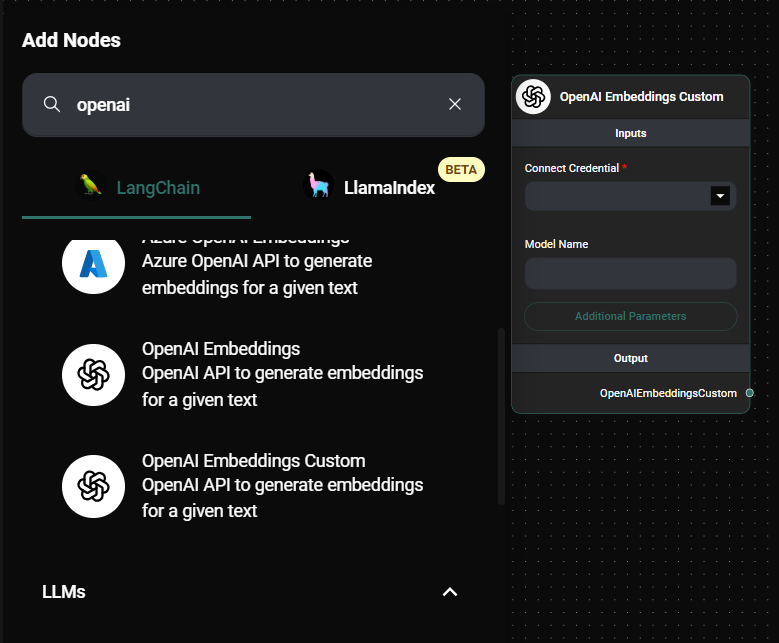

OpenAI Embeddings Custom Node & Drop UI

Tips and Best Practices

- Experiment with different model names to find the best fit for your specific use case.

- Adjust the number of dimensions to balance between embedding richness and computational efficiency.

- Use batch processing for large datasets to optimize API usage and improve performance.

- Consider stripping new lines for texts where line breaks are not semantically important.

Customizing Embeddings

AnswerAI's OpenAI Embeddings Custom feature allows you to create tailored embeddings, similar to the process described in the OpenAI Cookbook. Here's how you can leverage this functionality:

-

Model Selection: Choose from various OpenAI models to generate embeddings that best suit your needs. Different models may produce embeddings with varying characteristics.

-

Dimensionality: Adjust the number of dimensions in your embeddings. Higher dimensions can capture more nuanced relationships but may increase computational costs.

-

Input Preprocessing: Use the "Strip New Lines" option to clean your input text, ensuring consistent embedding generation across different text formats.

-

Fine-tuning for Specific Tasks: By carefully selecting your input text and model parameters, you can create embeddings that are optimized for specific tasks such as semantic search, text classification, or content recommendation.

-

API Customization: Utilize the "Base Path" option to point to a specific API endpoint, allowing for potential use of fine-tuned models or custom deployment scenarios.

Troubleshooting

- API Key Issues: Ensure your OpenAI API key is correctly entered in the credential settings.

- Timeout Errors: If you encounter timeout errors, try increasing the timeout value or reducing the batch size.

- Dimension Mismatch: Make sure the specified number of dimensions is supported by the chosen model.

- Rate Limiting: If you hit API rate limits, consider adjusting your batch size or implementing a backoff strategy in your workflows.

By leveraging the OpenAI Embeddings Custom feature in AnswerAI, you can create powerful, tailored text representations that enhance the performance of your natural language processing tasks.