OpenAI Embeddings

Overview

OpenAI Embeddings is a powerful feature in AnswerAI that generates vector representations (embeddings) of text. These embeddings are crucial for various natural language processing tasks, including semantic search, text classification, and content recommendation.

Key Benefits

- High-quality text representations for improved language understanding

- Seamless integration with other AnswerAI features

- Versatile applications across various NLP tasks

How to Use

- In your AnswerAI workflow, locate the Embeddings section.

- Select "OpenAI Embeddings" from the available options.

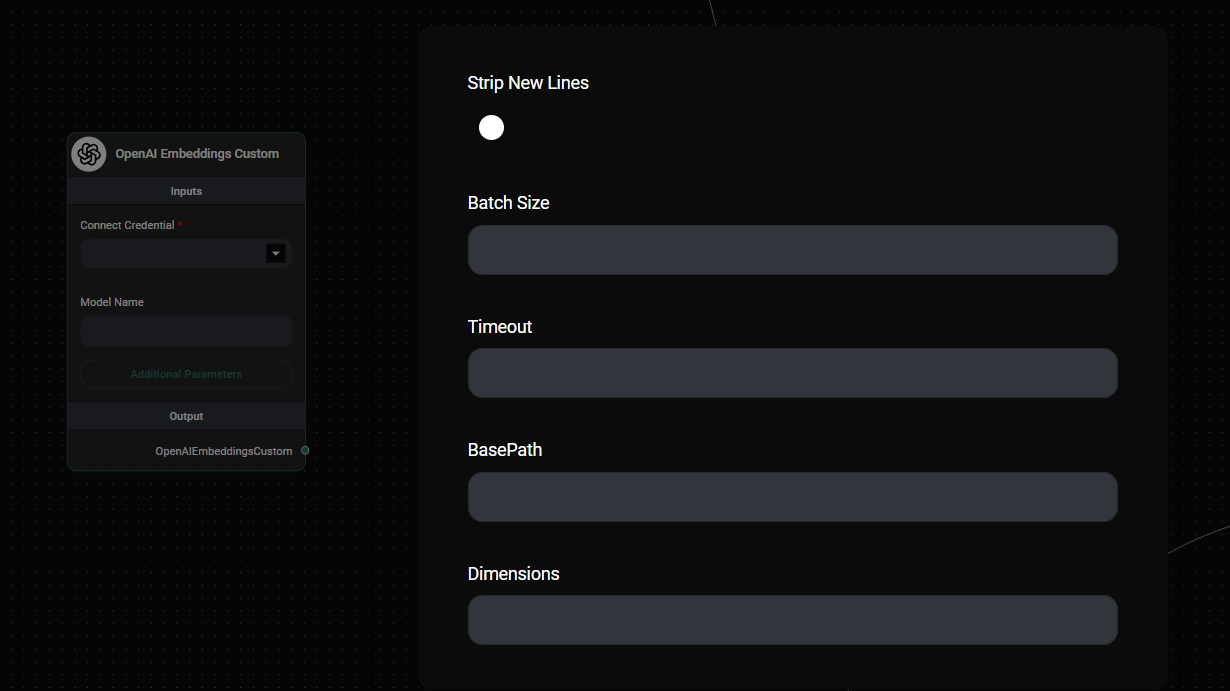

- Configure the embedding parameters:

- Model Name: Choose the desired OpenAI embedding model (default is "text-embedding-ada-002").

- Strip New Lines: Enable to remove newline characters from the input text.

- Batch Size: Set the number of texts to process in a single API call.

- Timeout: Specify the maximum time (in milliseconds) to wait for the API response.

- BasePath: Enter a custom API endpoint if needed.

- Dimensions: Specify the desired embedding dimensions (if supported by the chosen model).

- Connect your OpenAI API credentials.

- Save and run your workflow.

OpenAI Embeddings Configuration Panel & Drop UI

Tips and Best Practices

-

Default Option: OpenAI Embeddings is often the default choice for many sidekick workflows in AnswerAI due to its high quality and versatility.

-

Experiment with Alternatives: While OpenAI Embeddings is a solid choice, consider experimenting with different embedding models for your specific use case. AnswerAI offers various embedding options that may perform better for certain tasks or domains.

-

Model Selection: The "text-embedding-ada-002" model is a good starting point, but be aware of newer models that OpenAI may release, as they could offer improved performance.

-

Optimize Batch Size: Adjust the batch size to balance between processing speed and API usage. Larger batch sizes can be more efficient but may increase latency.

-

Monitor Token Usage: Keep an eye on your OpenAI API token usage, especially when processing large volumes of text.

Troubleshooting

-

API Key Issues: Ensure your OpenAI API key is correctly configured in your AnswerAI credentials.

-

Rate Limiting: If you encounter rate limit errors, try reducing the batch size or implementing a retry mechanism with exponential backoff.

-

Timeout Errors: For large texts or slow connections, increase the timeout value to allow more time for the API to respond.

-

Unsupported Models: If you receive an error about an unsupported model, check the OpenAI API documentation for the latest available embedding models.

Remember, while OpenAI Embeddings is an excellent default choice, the best embedding model for your project may vary depending on your specific requirements, data characteristics, and performance needs. Don't hesitate to experiment with different embedding options available in AnswerAI to find the optimal solution for your use case.