MistralAI Embeddings

Overview

MistralAI Embeddings is a powerful feature in AnswerAI that allows you to generate vector representations (embeddings) of text using the MistralAI API. These embeddings can be used for various natural language processing tasks, such as semantic search, text classification, and more.

Key Benefits

- High-quality embeddings: Leverage MistralAI's advanced language models for accurate text representations.

- Customizable: Adjust parameters like batch size and model selection to suit your specific needs.

- Efficient processing: Optimize your workflow with batch processing and optional performance tweaks.

How to Use

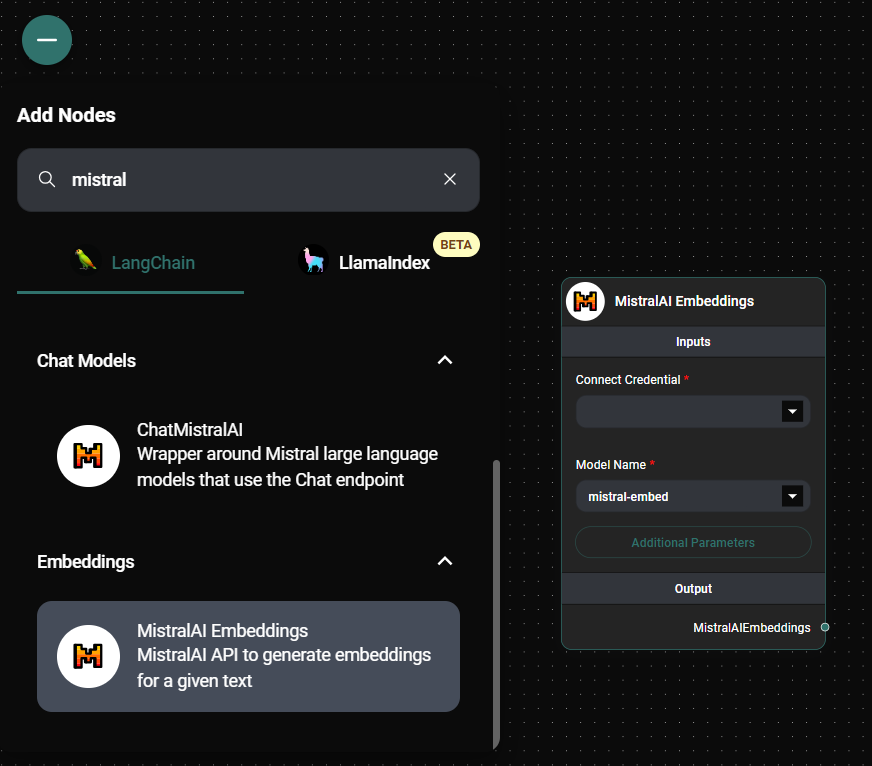

- Add the MistralAI Embeddings node to your AnswerAI workflow.

MistralAI Embeddings Node & Drop UI

- Configure the node settings: a. Connect your MistralAI API credential. b. Select the desired model from the "Model Name" dropdown. c. (Optional) Adjust additional parameters like Batch Size and Strip New Lines.

MistralAI Embeddings Node Configuration & Drop UI

-

Connect the MistralAI Embeddings node to your input text source and output destination.

-

Run your workflow to generate embeddings for your text data.

Tips and Best Practices

-

Choose the appropriate model: Select the model that best fits your use case and performance requirements.

-

Optimize batch size: Adjust the batch size to balance between processing speed and memory usage.

-

Consider stripping new lines: Enable the "Strip New Lines" option to remove unnecessary whitespace, which can improve embedding quality for certain types of text.

-

Use embeddings effectively: Incorporate the generated embeddings into downstream tasks like similarity search, clustering, or as input features for machine learning models.

Troubleshooting

-

API Key Issues:

- Ensure that you have entered the correct MistralAI API key in the credential settings.

- Verify that your API key has the necessary permissions to access the embedding models.

-

Model Availability:

- If a specific model is not available, try refreshing the model list or check the MistralAI documentation for any recent changes.

-

Performance Concerns:

- If processing is slow, try reducing the batch size or using a smaller, faster model.

- For large-scale processing, consider using the override endpoint option to connect to a dedicated MistralAI instance.

-

Embedding Quality:

- If the embeddings are not producing expected results, experiment with different models or adjust the input text preprocessing (e.g., try with and without stripping new lines).

Remember to respect MistralAI's usage policies and rate limits when using this feature. For any persistent issues, consult the AnswerAI support documentation or contact our support team.