HuggingFace Inference Embeddings

Overview

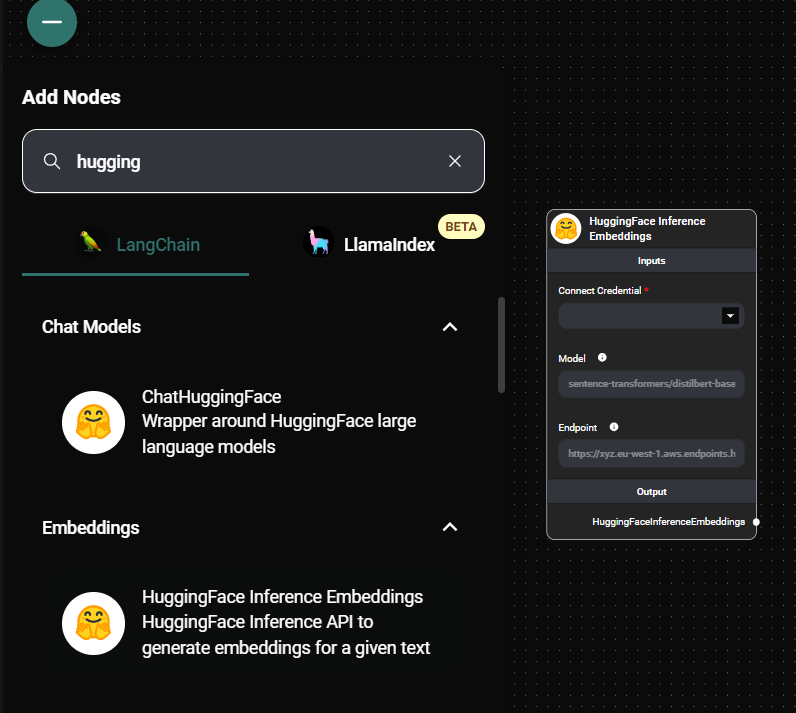

The HuggingFace Inference Embeddings feature in AnswerAI allows you to generate embeddings for a given text using the HuggingFace Inference API. Embeddings are numerical representations of text that capture semantic meaning, which can be useful for various natural language processing tasks.

Key Benefits

- Access to state-of-the-art embedding models from HuggingFace

- Flexibility to use pre-defined models or your own custom inference endpoint

- Easy integration with other AnswerAI components for advanced NLP workflows

How to Use

-

Connect your HuggingFace API Credential:

- Click on the "Connect Credential" option

- Select or add your HuggingFace API credentials

-

Configure the Embedding Settings:

-

Model (Optional): Enter the name of the HuggingFace model you want to use for generating embeddings. If left blank, a default model will be used.

- Example:

sentence-transformers/distilbert-base-nli-mean-tokens

- Example:

-

Endpoint (Optional): If you're using your own inference endpoint, enter the URL here. Leave this blank if you're using a standard HuggingFace model.

- Example:

https://xyz.eu-west-1.aws.endpoints.huggingface.cloud/sentence-transformers/all-MiniLM-L6-v2

- Example:

-

-

Connect the HuggingFace Inference Embeddings node to other components in your AnswerAI workflow that require text embeddings.

HuggingFace Embeddings Node & Drop UI

Tips and Best Practices

-

Choose the right model: Different embedding models are optimized for different tasks. Research and select a model that best fits your specific use case.

-

Custom endpoints: If you have fine-tuned your own embedding model, you can deploy it to a custom endpoint and use it with this feature by providing the endpoint URL.

-

API usage: Be mindful of your API usage, especially if you're processing large volumes of text. Check your HuggingFace account for usage limits and pricing details.

-

Caching: Consider implementing caching mechanisms for frequently used embeddings to reduce API calls and improve performance.

Troubleshooting

-

Authentication errors:

- Ensure that you have entered the correct HuggingFace API key in your credentials.

- Verify that your API key has the necessary permissions to access the Inference API.

-

Model not found:

- Double-check the model name for any typos.

- Ensure that the model you're trying to use is available through the HuggingFace Inference API.

-

Endpoint connection issues:

- If using a custom endpoint, verify that the URL is correct and the endpoint is accessible.

- Check your network connection and firewall settings.

-

Unexpected results:

- Verify that the input text is in the correct format expected by the model.

- Try using a different model to see if the issue persists.

For any persistent issues, consult the AnswerAI documentation or reach out to our support team for assistance.