ChatOpenAI

Overview

ChatOpenAI is a powerful wrapper around OpenAI's large language models that use the Chat endpoint. It serves as the default chat model for most workflows in AnswerAI, providing a seamless integration with OpenAI's GPT models.

Key Benefits

- Easy integration with OpenAI's most advanced language models

- Customizable parameters for fine-tuned responses

- Support for image uploads and multi-modal interactions

How to Use

-

In the AnswerAI interface, locate the "ChatOpenAI" node in the "Chat Models" category.

-

Drag and drop the ChatOpenAI node into your workflow.

-

Connect your OpenAI API credentials:

- Click on the node to open its settings.

- Under "Connect Credential", select or add your OpenAI API key.

-

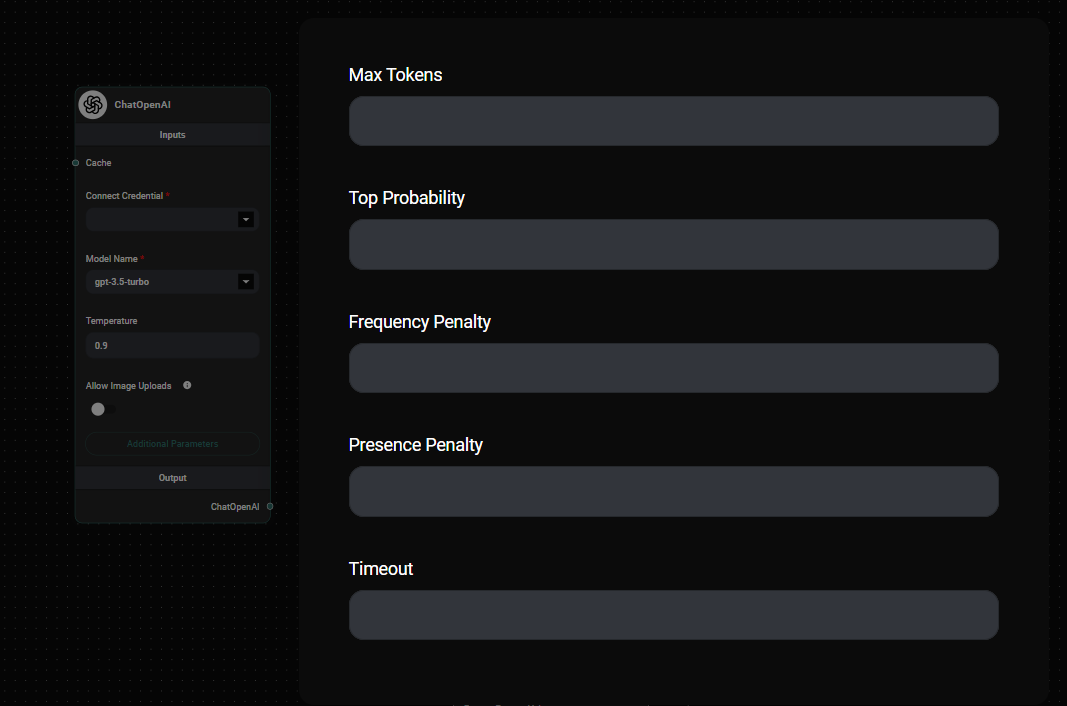

Configure the model parameters:

- Choose a Model Name (default is "gpt-3.5-turbo")

- Adjust Temperature, Max Tokens, and other parameters as needed

- Enable or disable image uploads and set image resolution if required

-

Connect the ChatOpenAI node to other nodes in your workflow to utilize its capabilities.

ChatOpenAI Node Configuration & Drop UI

Tips and Best Practices

-

Experiment with different models: While ChatOpenAI with gpt-3.5-turbo is the default, don't hesitate to try other models. Some may be better suited for your specific use case and could potentially be more cost-effective.

-

Optimize temperature: A lower temperature (closer to 0) will make responses more deterministic, while a higher value (closer to 1) will make them more creative and diverse.

-

Use max tokens wisely: Set an appropriate max token limit to control response length and manage costs.

-

Leverage image capabilities: If your use case involves visual content, enable the "Allow Image Uploads" option to utilize multi-modal features.

-

Monitor and adjust: Keep an eye on your model's performance and costs. Adjust parameters or switch models if needed to optimize your workflow.

Troubleshooting

-

API Key Issues:

- Ensure your OpenAI API key is correctly entered and has sufficient credits.

- Check if you have access to the selected model (some models may require special access).

-

Unexpected Responses:

- Review and adjust the temperature and other parameters.

- Ensure your prompts are clear and well-structured.

-

Token Limit Errors:

- Increase the Max Tokens parameter or reduce the input length.

- Consider using a model with a higher token limit if consistently hitting limits.

-

Performance Issues:

- If responses are slow, check your internet connection and consider using a model with lower latency.

- Adjust the timeout parameter if needed.

Remember, while ChatOpenAI is a great starting point, AnswerAI offers a variety of other models that might better suit your specific needs. Don't hesitate to experiment with different options to find the perfect balance of performance, cost, and capabilities for your project.