ChatHuggingFace

Overview

ChatHuggingFace is a powerful feature in AnswerAI that allows you to interact with Hugging Face's large language models. This feature provides a user-friendly interface to leverage state-of-the-art natural language processing capabilities for various tasks such as text generation, question-answering, and more.

Key Benefits

- Access to a wide range of pre-trained language models

- Customizable parameters for fine-tuned outputs

- Option to use your own inference endpoint for specialized needs

How to Use

-

Navigate to the ChatHuggingFace node in the AnswerAI interface.

-

Configure the following settings:

a. Connect Credential: Link your Hugging Face API credentials.

b. Model: Enter the name of the Hugging Face model you want to use (e.g., "gpt2"). Leave this blank if using your own inference endpoint.

c. Endpoint (Optional): If you're using your own inference endpoint, enter the URL here.

d. Additional Parameters (Optional):

- Temperature

- Max Tokens

- Top Probability

- Top K

- Frequency Penalty

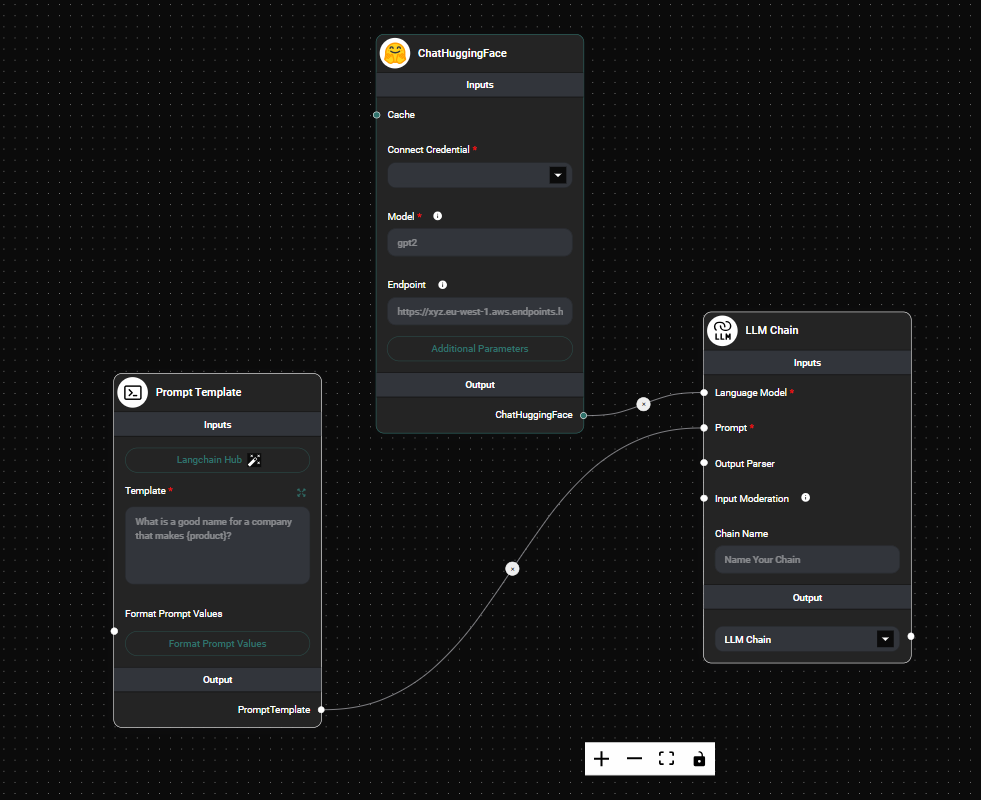

ChatHugging Face Node Configuration & Drop UI

-

Connect the ChatHuggingFace node to other nodes in your AnswerAI workflow.

-

Run your workflow to interact with the Hugging Face model.

Tips and Best Practices

-

Model Selection: Choose the appropriate model for your task. Different models excel at different types of language processing tasks.

-

Parameter Tuning: Experiment with different parameter values to achieve the desired output. For example:

- Increase temperature for more creative outputs

- Decrease temperature for more focused and deterministic responses

- Adjust max tokens to control the length of the generated text

-

API Key Security: Always keep your Hugging Face API key secure. Use the credential management system in AnswerAI to safely store and use your API key.

-

Custom Endpoints: If you have specific requirements or want to use a fine-tuned model, consider setting up your own inference endpoint.

Troubleshooting

-

Model Not Found: Ensure that you've entered the correct model name. Check the Hugging Face model hub for available models.

-

API Key Issues: Verify that your Hugging Face API key is correctly entered in the AnswerAI credential manager.

-

Endpoint Errors: If using a custom endpoint, make sure the URL is correct and the endpoint is accessible.

-

Unexpected Outputs: Adjust the model parameters, especially temperature and max tokens, to fine-tune the output quality and length.

-

Rate Limiting: Be aware of the API rate limits for your Hugging Face account. If you're experiencing frequent timeouts, you may need to upgrade your plan or optimize your usage.

Remember that different models may have different available parameters. Always refer to the specific model's documentation on the Hugging Face website for the most accurate information on available settings and best practices.

By leveraging the ChatHuggingFace feature in AnswerAI, you can easily integrate powerful open-sourcelanguage models into your workflows, enabling sophisticated natural language processing capabilities for a wide range of applications.