Redis Cache

Overview

The Redis Cache feature in AnswerAI stores Large Language Model (LLM) responses in Redis, a high-performance, in-memory data store. Because Redis runs as a separate server, the cache can be shared across multiple processes or servers, which improves response times for repeated prompts and reduces the load on your LLM service.

Key Benefits

- Faster response times for repeated queries

- Reduced API usage, potentially lowering costs

- Shared cache across multiple processes or servers

- Scalable and high-performance caching solution

- Configurable Time to Live (TTL) for cache entries

How to Use

Set up a Redis server:

- Install Redis on your server or use a managed Redis service

- Note down the connection details (host, port, username, password)
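
Before entering the connection details into AnswerAI, it can help to confirm that the server is reachable from the machine running AnswerAI. The following is a minimal sketch using only Python's standard library. The host `localhost`, port `6379`, and the absence of authentication are assumptions for illustration; the helper names `encode_command` and `ping` are hypothetical. It sends a `PING` in the Redis wire protocol (RESP) and checks for a `+PONG` reply.

```python
import socket


def encode_command(*parts: str) -> bytes:
    """Encode a command as a RESP array of bulk strings."""
    out = [f"*{len(parts)}\r\n".encode()]
    for part in parts:
        data = part.encode()
        out.append(b"$" + str(len(data)).encode() + b"\r\n" + data + b"\r\n")
    return b"".join(out)


def ping(host: str = "localhost", port: int = 6379, timeout: float = 2.0) -> bool:
    """Return True if the server answers PING with +PONG, False otherwise."""
    try:
        with socket.create_connection((host, port), timeout=timeout) as sock:
            sock.sendall(encode_command("PING"))
            reply = sock.recv(64)
    except OSError:
        # Covers refused connections, DNS failures, and timeouts.
        return False
    return reply.startswith(b"+PONG")


if __name__ == "__main__":
    # Assumed host and port; replace with the details you noted down.
    print("reachable" if ping() else "not reachable (or authentication required)")
```

A server that requires a password replies with an error instead of `+PONG`, so a `False` result does not always mean the server is down.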

-

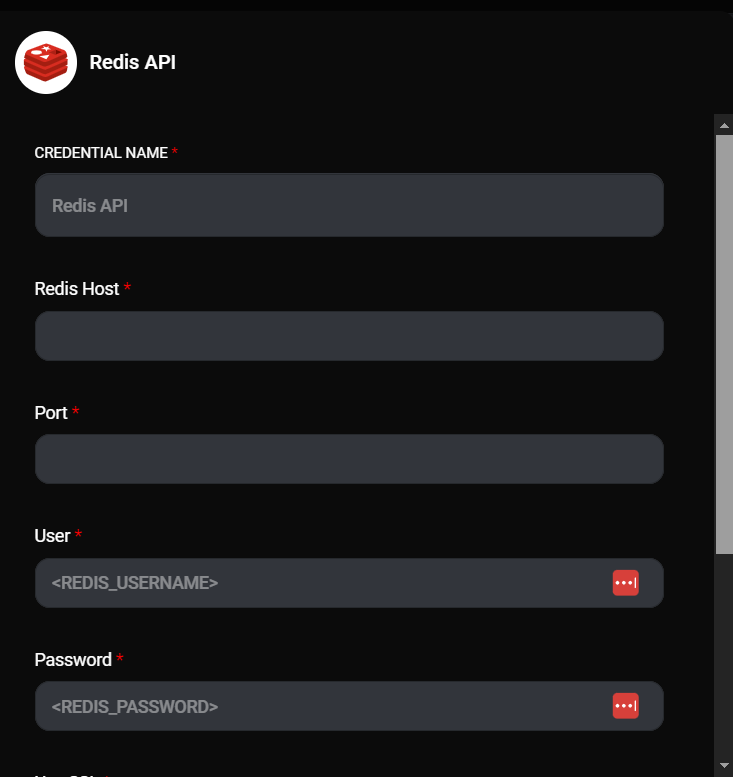

Configure the Redis Cache credential in AnswerAI:

- Navigate to the credentials section in AnswerAI

- Create a new credential of type 'redisCacheApi' or 'redisCacheUrlApi'

- For 'redisCacheApi', enter the Redis host, port, username, and password

- For 'redisCacheUrlApi', enter the Redis connection URL

- If using SSL, enable the SSL option

Redis Cache Credentials & Drop UI
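
The two credential types carry the same information in different shapes. As a rough illustration, the sketch below assembles a connection URL from separate host, port, username, and password values. It assumes the conventional `redis://` scheme (and `rediss://` for TLS) used by common Redis clients; whether 'redisCacheUrlApi' expects exactly this form is an assumption, and `build_redis_url` is a hypothetical helper, not part of AnswerAI.

```python
from urllib.parse import quote


def build_redis_url(host: str, port: int = 6379, username: str = "",
                    password: str = "", use_ssl: bool = False) -> str:
    """Build a Redis connection URL from individual connection details."""
    # "rediss" (double s) is the conventional scheme for TLS connections.
    scheme = "rediss" if use_ssl else "redis"
    auth = ""
    if username or password:
        # Percent-encode credentials so characters like "@" or "/" stay valid.
        auth = f"{quote(username, safe='')}:{quote(password, safe='')}@"
    return f"{scheme}://{auth}{host}:{port}"


if __name__ == "__main__":
    # Hypothetical host and credentials, for illustration only.
    print(build_redis_url("cache.example.com", 6380, "default", "p@ss/word", use_ssl=True))
```

Percent-encoding matters in practice: a password containing `@` or `/` would otherwise be misread as part of the host or path.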

-

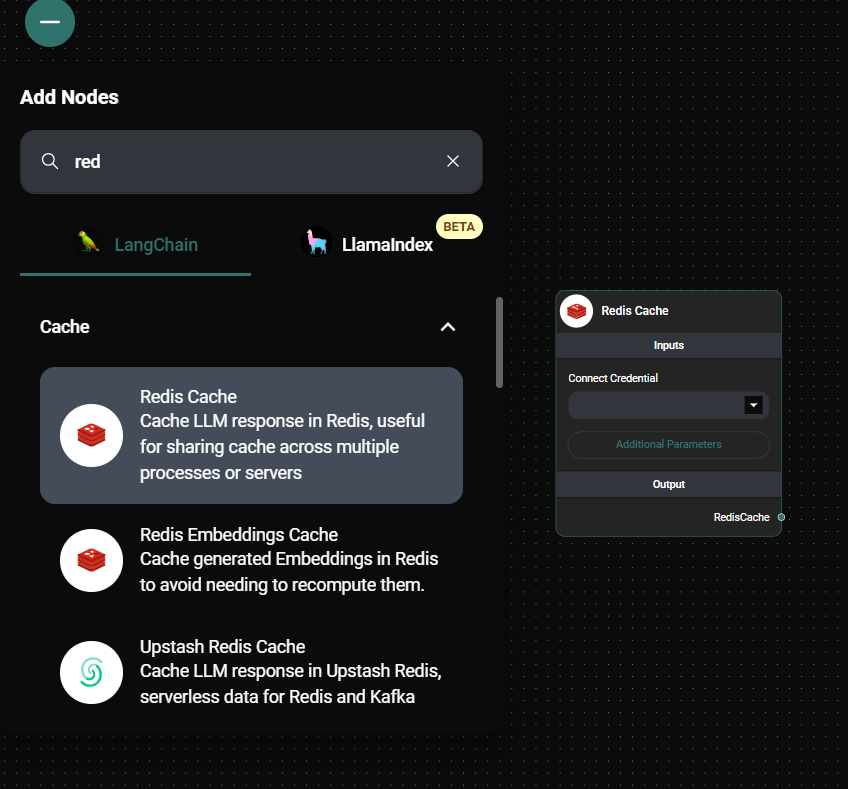

Add the Redis Cache node to your AnswerAI workflow:

Redis Cache Configuration & Drop UI

-

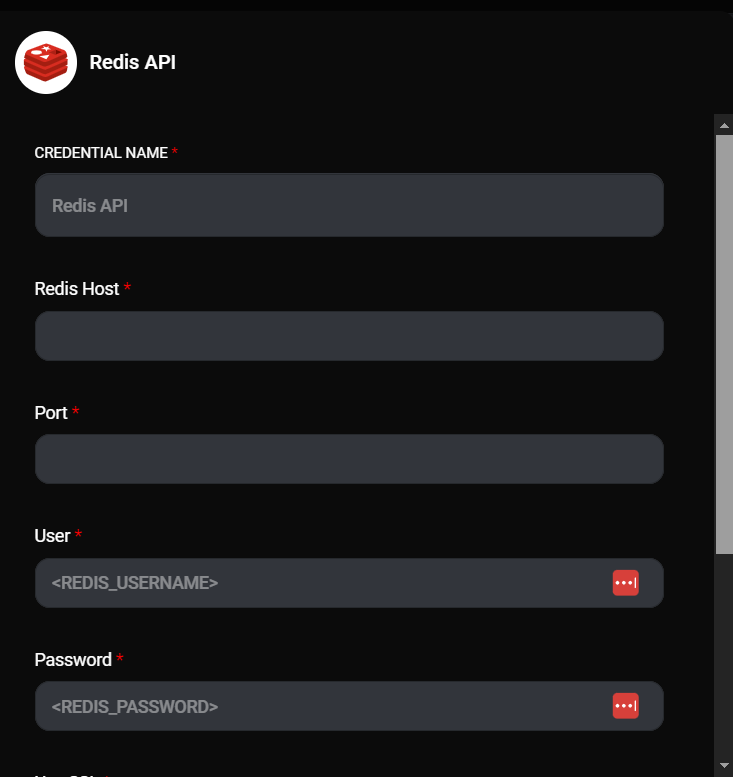

Configure the Redis Cache node:

- Connect the previously created credential to the node

- (Optional) Set the Time to Live (TTL) in milliseconds

Redis API Cache Configuration & Drop UI

-

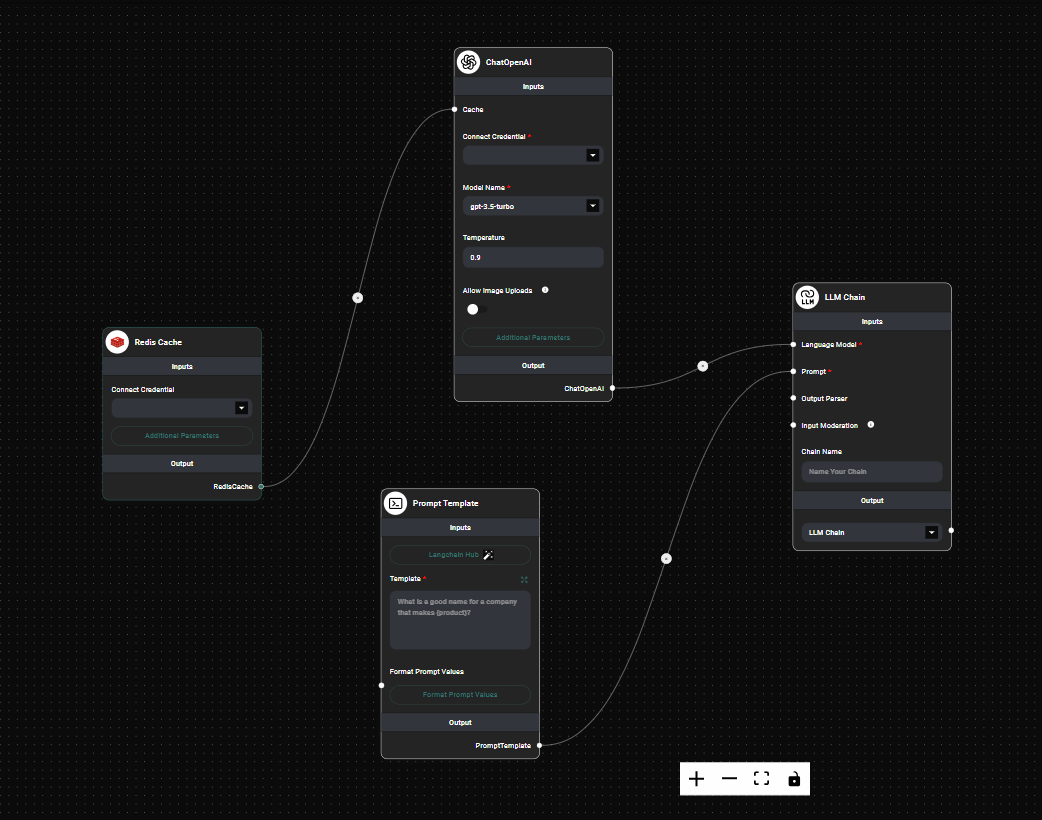

Connect the Redis Cache node to your LLM node:

Redis API Cache In A workflow & Drop UI

Run your workflow:

- The first time a unique prompt is processed, the response will be cached in Redis

- Subsequent identical prompts will retrieve the cached response, improving performance
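
The behavior described above follows the general cache-aside pattern. The sketch below is a conceptual illustration only, not AnswerAI's implementation: a plain dictionary stands in for Redis, the key is assumed to be a SHA-256 hash of the prompt text, and the names `cache_key`, `cached_completion`, and `fake_llm` are hypothetical.

```python
import hashlib
from typing import Callable, Dict


def cache_key(prompt: str) -> str:
    """Derive a fixed-length key from the prompt (assumed keying scheme)."""
    return "llm-cache:" + hashlib.sha256(prompt.encode("utf-8")).hexdigest()


def cached_completion(prompt: str, llm: Callable[[str], str],
                      store: Dict[str, str]) -> str:
    """Return the cached response if present; otherwise call the LLM and cache it."""
    key = cache_key(prompt)
    if key in store:
        return store[key]          # cache hit: the LLM is not called
    response = llm(prompt)         # cache miss: call the LLM once
    store[key] = response
    return response


if __name__ == "__main__":
    calls = []

    def fake_llm(prompt: str) -> str:
        # Stand-in for a real LLM call; records each invocation.
        calls.append(prompt)
        return f"answer to: {prompt}"

    store: Dict[str, str] = {}     # stands in for the Redis server
    cached_completion("What is Redis?", fake_llm, store)
    cached_completion("What is Redis?", fake_llm, store)
    print(len(calls))  # 1: the second identical prompt was served from the cache
```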

Tips and Best Practices

Optimize cache usage:

- Use caching for stable, non-dynamic content

- Ideal for frequently asked questions or standard procedures

- Avoid caching for time-sensitive or rapidly changing information

Configure Time to Live (TTL):

- Set an appropriate TTL based on how frequently your data changes

- Shorter TTL for more dynamic content, longer TTL for stable information

- If TTL is not set, cached entries will persist until they are removed manually (or evicted, if your Redis server is configured with an eviction policy)
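
To make the TTL semantics concrete, the sketch below models expiry with an in-memory store that takes a TTL in milliseconds, the same unit the Redis Cache node uses. This is an illustration under assumptions: `TtlStore` is a hypothetical stand-in for Redis (which offers comparable behavior through the `PX` option of its `SET` command), and the clock is injectable so that expiry can be demonstrated without waiting.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class TtlStore:
    """In-memory stand-in for a cache whose entries can expire."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._clock = clock
        self._data: Dict[str, Tuple[str, Optional[float]]] = {}

    def set(self, key: str, value: str, ttl_ms: Optional[int] = None) -> None:
        # No TTL means the entry never expires on its own.
        expires_at = None if ttl_ms is None else self._clock() + ttl_ms / 1000.0
        self._data[key] = (value, expires_at)

    def get(self, key: str) -> Optional[str]:
        entry = self._data.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if expires_at is not None and self._clock() >= expires_at:
            del self._data[key]    # expired: behave like a cache miss
            return None
        return value


if __name__ == "__main__":
    now = [0.0]
    store = TtlStore(clock=lambda: now[0])
    store.set("faq", "stable answer")                   # no TTL
    store.set("news", "fresh answer", ttl_ms=60_000)    # expires after 60 seconds
    now[0] = 61.0                                       # simulate 61 seconds passing
    print(store.get("faq"), store.get("news"))          # stable answer None
```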

Monitor Redis performance:

- Regularly check Redis memory usage and performance metrics

- Implement alerting for Redis server health
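
One simple memory check is to compare the `used_memory` and `maxmemory` fields that Redis reports in the output of its `INFO memory` command. The sketch below parses that text format and computes a usage ratio. The sample text is made up for illustration, the helper names are hypothetical, and how you obtain the `INFO` output (for example via `redis-cli`) is left to your setup.

```python
from typing import Dict, Optional


def parse_info(text: str) -> Dict[str, str]:
    """Parse 'name:value' lines from INFO output, skipping section headers."""
    fields: Dict[str, str] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or ":" not in line:
            continue
        name, _, value = line.partition(":")
        fields[name] = value
    return fields


def memory_usage_ratio(fields: Dict[str, str]) -> Optional[float]:
    """Return used_memory / maxmemory, or None when no memory limit is set."""
    used = int(fields["used_memory"])
    limit = int(fields.get("maxmemory", "0"))
    if limit == 0:
        return None                # maxmemory of 0 means no configured limit
    return used / limit


if __name__ == "__main__":
    # Made-up sample in the INFO text format.
    sample = "# Memory\r\nused_memory:1048576\r\nused_memory_human:1.00M\r\nmaxmemory:4194304\r\n"
    print(memory_usage_ratio(parse_info(sample)))  # 0.25
```

An alert could then fire when the ratio exceeds a threshold you choose, such as 0.8.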

Secure your Redis instance:

- Use strong passwords and consider using SSL for encrypted connections

- Implement proper network security measures to protect your Redis server

Scale your Redis setup:

- For high-traffic applications, consider using Redis clusters or replication

- Implement proper backup and recovery procedures for your Redis data

Handle cache misses gracefully:

- Implement fallback mechanisms in case of cache misses or Redis connection issues
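
If you call a cached workflow from your own code, one way to apply this is to treat any cache failure as a miss, so that a Redis outage slows responses but does not break them. The sketch below is a generic illustration with hypothetical names (`complete_with_fallback`, `cache_get`, `cache_set`). It catches `OSError`, which covers Python's built-in connection and timeout errors; a real Redis client library may raise its own exception types, which you would catch instead.

```python
from typing import Callable, Optional


def complete_with_fallback(prompt: str,
                           llm: Callable[[str], str],
                           cache_get: Callable[[str], Optional[str]],
                           cache_set: Callable[[str, str], None]) -> str:
    """Serve from the cache when possible; fall back to the LLM on miss or error."""
    try:
        cached = cache_get(prompt)
    except OSError:
        cached = None              # cache unavailable: treat it like a miss
    if cached is not None:
        return cached
    response = llm(prompt)
    try:
        cache_set(prompt, response)
    except OSError:
        pass                       # failing to store must not fail the request
    return response


if __name__ == "__main__":
    def broken_get(prompt: str) -> Optional[str]:
        raise ConnectionError("cache unreachable")

    def broken_set(prompt: str, response: str) -> None:
        raise ConnectionError("cache unreachable")

    # The request still succeeds even though every cache call fails.
    print(complete_with_fallback("hi", lambda p: "fresh answer", broken_get, broken_set))
```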

Troubleshooting

Connection issues:

- Verify that the Redis server is running and accessible

- Check if the credential details (host, port, username, password) are correct

- Ensure that firewalls or network policies allow connections to the Redis server

Cache misses or unexpected responses:

- Verify that the Redis Cache node is correctly connected in your workflow

- Check if your prompt includes dynamic elements that might prevent proper caching

- Ensure the TTL is set appropriately for your use case
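
To see why dynamic elements cause misses, consider a cache that matches on the exact prompt text, as the "identical prompts" behavior described earlier suggests. The sketch below assumes a hash of the prompt as the cache key (an assumption, not a documented detail of AnswerAI) and shows that embedding a date in the prompt produces a different key each day, while a stable prompt always maps to the same key.

```python
import hashlib


def cache_key(prompt: str) -> str:
    """Assumed keying scheme: a SHA-256 hash of the exact prompt text."""
    return hashlib.sha256(prompt.encode("utf-8")).hexdigest()


if __name__ == "__main__":
    # Hypothetical prompt template that embeds a changing value.
    template = "Today is {date}. What is our refund policy?"
    monday = cache_key(template.format(date="2024-01-01"))
    tuesday = cache_key(template.format(date="2024-01-02"))
    print(monday == tuesday)   # False: each day produces a new cache entry

    stable = "What is our refund policy?"
    print(cache_key(stable) == cache_key(stable))  # True: identical prompts share a key
```

If a prompt must include changing values, consider moving them out of the cached portion of the workflow where possible.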

Performance issues:

- Monitor Redis server load and memory usage

- Consider upgrading your Redis server or implementing clustering for better performance

- Optimize your cache key generation if necessary

Data consistency issues:

- If you're using multiple Redis instances, ensure they are properly synchronized

- Implement proper error handling for scenarios where cached data might be inconsistent

SSL connection problems:

- Verify that SSL is properly configured on both the Redis server and in the AnswerAI credential

- Check SSL certificate validity and expiration

If you encounter any issues not covered here, refer to the Redis documentation or contact AnswerAI support for assistance.

By using the Redis Cache feature, you can improve the performance, scalability, and efficiency of your AnswerAI workflows, particularly for high-traffic applications or deployments that need a cache shared across multiple processes or servers.