InMemory Cache

Overview

The InMemory Cache feature in AnswerAI stores Large Language Model (LLM) responses in the application's local memory. When an identical prompt is sent again, the cached response is returned instead of making another API call to the LLM, which improves response times.
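As a rough mental model, an in-memory LLM cache is a lookup table held inside the running process. The Python sketch below is an illustration under assumptions, not AnswerAI's implementation. It assumes entries are keyed on the exact prompt text plus a string describing the model configuration (the hypothetical `llm_key`), similar to the `lookup`/`update` interface of LangChain caches. `SimpleInMemoryCache` is a hypothetical name.

```python
from typing import Dict, Optional, Tuple


class SimpleInMemoryCache:
    """Hypothetical in-memory LLM response cache (illustration only)."""

    def __init__(self) -> None:
        # A plain dict held in process memory; it is lost when the process exits.
        self._store: Dict[Tuple[str, str], str] = {}

    def lookup(self, prompt: str, llm_key: str) -> Optional[str]:
        # Return the cached response for this exact prompt and model key, or None on a miss.
        return self._store.get((prompt, llm_key))

    def update(self, prompt: str, llm_key: str, response: str) -> None:
        # Store (or overwrite) the response for this prompt/model pair.
        self._store[(prompt, llm_key)] = response


cache = SimpleInMemoryCache()
cache.update("What is the capital of France?", "model-a|temperature=0", "Paris.")

# Same prompt and same model key: served from memory.
print(cache.lookup("What is the capital of France?", "model-a|temperature=0"))  # Paris.
# Same prompt but a different model key: a separate entry, so this is a miss.
print(cache.lookup("What is the capital of France?", "model-b|temperature=0"))  # None
```

Including the model configuration in the key keeps answers from different models or settings apart; treat that detail as an assumption about how AnswerAI's node builds its keys.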

Key Benefits

- Faster response times for repeated queries

- Reduced API usage, potentially lowering costs

- Improved overall system efficiency

How to Use

-

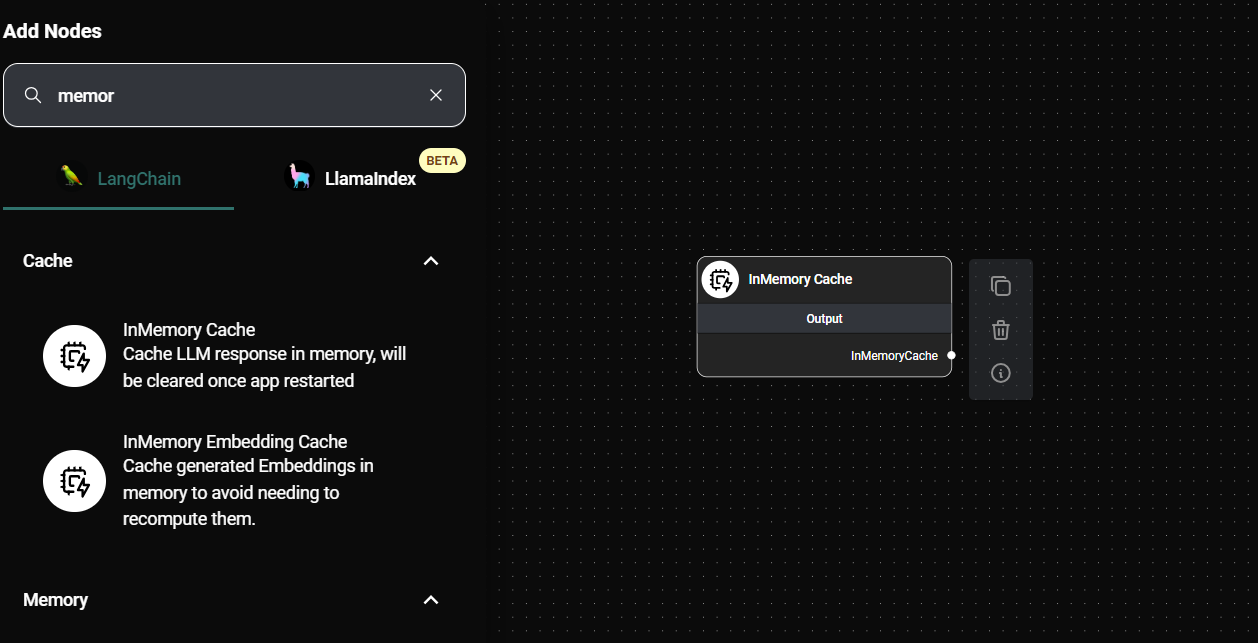

Add the InMemory Cache node to your AnswerAI workflow:

In Memory Cache & Drop UI

-

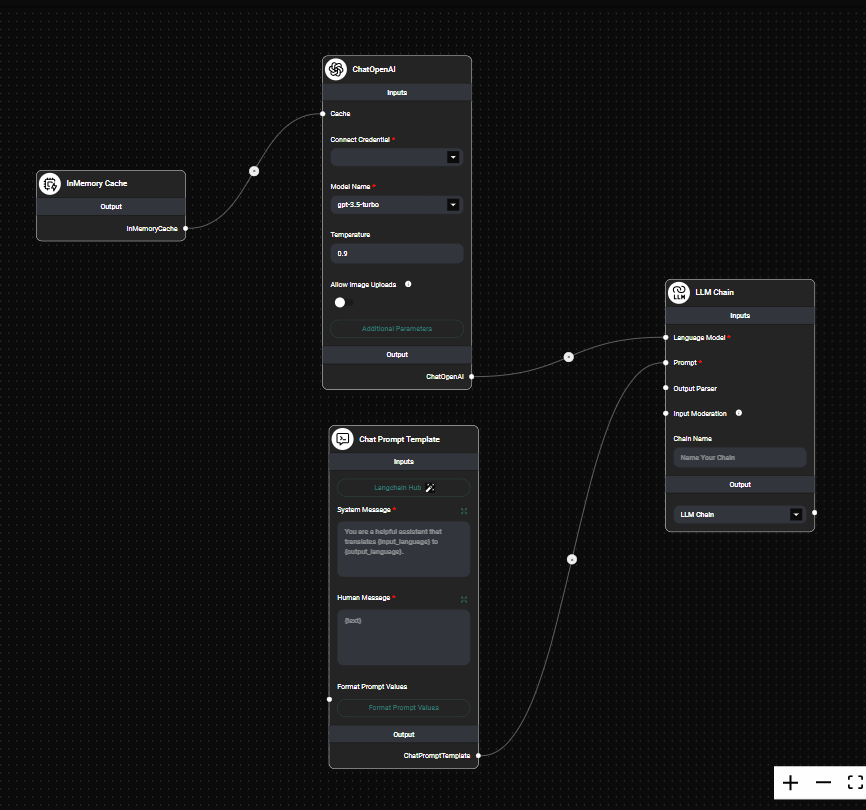

Connect the InMemory Cache node to your LLM node:

Tool Agent & Drop UI

- Configure your workflow to use the cache:
  - No additional configuration is required. The cache will automatically store and retrieve responses.

- Run your workflow:
  - The first time a unique prompt is processed, its response is cached.
  - Subsequent identical prompts retrieve the cached response instead of calling the LLM again, improving performance.
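The run-time behavior described in these steps is a read-through pattern: check the cache first, and call the model only on a miss. The Python sketch below is a hypothetical illustration, not part of AnswerAI. `make_cached_llm` is a hypothetical helper, `fake_llm` stands in for the real API call and counts how often it runs, and for brevity the cache is keyed on the prompt text alone.

```python
from typing import Callable, Dict


def make_cached_llm(call_llm: Callable[[str], str]) -> Callable[[str], str]:
    """Wrap a (hypothetical) LLM call so identical prompts are answered from memory."""
    cache: Dict[str, str] = {}

    def cached_call(prompt: str) -> str:
        if prompt in cache:
            return cache[prompt]      # cache hit: no API call
        response = call_llm(prompt)   # cache miss: call the model once
        cache[prompt] = response      # store for subsequent identical prompts
        return response

    return cached_call


api_calls = 0


def fake_llm(prompt: str) -> str:
    # Stand-in for a real LLM API call; counts how often it is invoked.
    global api_calls
    api_calls += 1
    return f"answer to: {prompt}"


ask = make_cached_llm(fake_llm)
ask("How do I reset my password?")  # first time: miss, calls fake_llm
ask("How do I reset my password?")  # identical prompt: served from the cache
ask("How do I change my email?")    # new prompt: miss, calls fake_llm
print(api_calls)  # 2
```

Three prompts produce only two model calls, because the repeated prompt is answered from the dictionary.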

Tips and Best Practices

- Use caching for stable, non-dynamic content:
  - Ideal for frequently asked questions or standard procedures.
  - Avoid caching time-sensitive or rapidly changing information.

- Monitor cache usage:
  - Regularly review which prompts are being cached to ensure relevance.
  - Consider clearing the cache periodically if information becomes outdated.

- Combine with other caching strategies:
  - For caching that persists across app restarts, consider using the InMemory Cache alongside a database caching solution.

- Test thoroughly:
  - Ensure that caching doesn't negatively impact the accuracy or relevance of your AI responses.
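The monitoring, clearing, and testing tips above can be sketched in Python. Everything here is hypothetical and is not an AnswerAI API: `InstrumentedCache` counts hits and misses and exposes its entries for review, `find_stale_entries` re-asks the model for each cached prompt and reports answers that no longer match, and `fake_llm` simulates a model whose answer depends on data that changes over time.

```python
from typing import Callable, Dict, List, Optional


class InstrumentedCache:
    """Hypothetical cache wrapper that records hits/misses and supports review and clearing."""

    def __init__(self) -> None:
        self._store: Dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    def get(self, prompt: str) -> Optional[str]:
        # Look up a prompt and update the hit/miss counters.
        if prompt in self._store:
            self.hits += 1
            return self._store[prompt]
        self.misses += 1
        return None

    def put(self, prompt: str, response: str) -> None:
        self._store[prompt] = response

    def entries(self) -> Dict[str, str]:
        # Snapshot of cached prompt -> response pairs, for review.
        return dict(self._store)

    def clear(self) -> None:
        # Drop every entry, e.g. once cached information is outdated.
        self._store.clear()


def find_stale_entries(cache: InstrumentedCache, call_llm: Callable[[str], str]) -> List[str]:
    """Re-ask the model for each cached prompt; return prompts whose cached answer differs."""
    return [p for p, cached in cache.entries().items() if call_llm(p) != cached]


# Simulated source of information that changes over time.
office_hours = {"value": "9am-5pm"}


def fake_llm(prompt: str) -> str:
    # Stand-in for a real LLM call whose answer depends on current data.
    return f"Office hours are {office_hours['value']}."


cache = InstrumentedCache()
prompt = "What are your office hours?"
for _ in range(3):
    if cache.get(prompt) is None:
        cache.put(prompt, fake_llm(prompt))

print(cache.hits, cache.misses)             # 2 1
print(list(cache.entries()))                # ['What are your office hours?']

office_hours["value"] = "8am-4pm"           # the underlying information changes
print(find_stale_entries(cache, fake_llm))  # ['What are your office hours?']

cache.clear()
print(list(cache.entries()))                # []
```

Re-querying the model costs one API call per cached prompt, and a non-deterministic model can return different wording even when the cached answer is still correct, so treat the result as a list of entries to review rather than proof that they are wrong.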

Troubleshooting

- Cached responses seem outdated:
  - The InMemory Cache is cleared when the AnswerAI app is restarted, so cached responses can persist for as long as the app keeps running.
  - If you need to update cached responses, restart the application or implement a cache-clearing mechanism.

- No performance improvement observed:
  - Verify that the InMemory Cache node is correctly connected in your workflow.
  - Ensure that you're testing with repeated, identical prompts to see the caching effect.

- Unexpected responses:
  - Check whether your prompt includes dynamic elements (e.g., timestamps, user-specific data) that might prevent proper caching.
  - Review your workflow to ensure the cache is being used as intended.
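The troubleshooting points about identical prompts and dynamic elements can be checked with a short Python sketch. It assumes the cache matches on the exact prompt text, as "identical prompts" above suggests; `hit_count` is a hypothetical helper that replays a list of prompts through a dictionary and counts how many would be cache hits.

```python
from datetime import datetime, timedelta
from typing import Dict, List


def hit_count(prompts: List[str]) -> int:
    """Count how many prompts would be served from an exact-match in-memory cache."""
    cache: Dict[str, str] = {}
    hits = 0
    for prompt in prompts:
        if prompt in cache:
            hits += 1
        else:
            cache[prompt] = f"response to {prompt!r}"  # simulated LLM response
    return hits


question = "Summarize our refund policy."
start = datetime(2024, 1, 1, 12, 0, 0)

# Identical prompts: every repeat after the first is a hit.
identical = [question] * 3

# Same question with a timestamp embedded: every prompt text is unique.
timestamped = [f"[{start + timedelta(seconds=i)}] {question}" for i in range(3)]

# Under exact matching, even a trailing space produces a different key.
near_duplicate = [question, question + " "]

print(hit_count(identical))       # 2
print(hit_count(timestamped))     # 0
print(hit_count(near_duplicate))  # 0
```

Embedding a timestamp (or a user ID, session ID, or similar value) makes every prompt unique, so the cache never returns a hit even though the underlying question is the same.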

Because the InMemory Cache is cleared whenever the AnswerAI app is restarted, consider a database caching solution, in addition to or instead of the InMemory Cache, when you need long-term persistence.
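One way to pair fast in-memory lookups with persistence is a two-tier cache. The Python sketch below is an illustration under assumptions, not how AnswerAI integrates database caching: the hypothetical `TwoTierCache` keeps a dict in memory and writes every entry through to a SQLite file using the standard-library `sqlite3` module, so a new instance (standing in for a restarted app) can still find entries the old in-memory dict lost.

```python
import os
import sqlite3
import tempfile
from typing import Dict, Optional


class TwoTierCache:
    """Hypothetical cache: an in-memory dict in front of a SQLite table.

    The dict disappears when the process stops; rows in the SQLite file do not.
    """

    def __init__(self, db_path: str) -> None:
        self._memory: Dict[str, str] = {}
        self._db = sqlite3.connect(db_path)
        self._db.execute(
            "CREATE TABLE IF NOT EXISTS llm_cache (prompt TEXT PRIMARY KEY, response TEXT)"
        )
        self._db.commit()

    def get(self, prompt: str) -> Optional[str]:
        if prompt in self._memory:  # fast path: in-memory hit
            return self._memory[prompt]
        row = self._db.execute(
            "SELECT response FROM llm_cache WHERE prompt = ?", (prompt,)
        ).fetchone()
        if row is None:
            return None  # miss in both tiers
        self._memory[prompt] = row[0]  # warm the in-memory tier from the database
        return row[0]

    def put(self, prompt: str, response: str) -> None:
        # Write to both tiers so the entry survives a restart.
        self._memory[prompt] = response
        self._db.execute(
            "INSERT OR REPLACE INTO llm_cache (prompt, response) VALUES (?, ?)",
            (prompt, response),
        )
        self._db.commit()

    def close(self) -> None:
        self._db.close()


db_path = os.path.join(tempfile.mkdtemp(), "llm_cache.db")

first_run = TwoTierCache(db_path)
first_run.put("What is 2 + 2?", "4")
first_run.close()  # simulate an app restart: this instance's in-memory dict is gone

second_run = TwoTierCache(db_path)
# The entry is recovered from the SQLite file, not from memory.
print(second_run.get("What is 2 + 2?"))  # 4
second_run.close()
```

Reads check memory first and fall back to the database, copying any row they find back into memory, so repeated lookups after a restart are served from memory again.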

By using the InMemory Cache for frequently repeated queries or stable information retrieval tasks, you can reduce response times and API usage in your AnswerAI workflows.