Custom List Output Parser

Overview

The Custom List Output Parser is an AnswerAI node that parses the output of a large language model (LLM) call into a list of values. It is useful when you need to extract structured information from an LLM response in list form.
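At its core, list parsing means splitting the raw response text on a separator and trimming the whitespace around each piece. The TypeScript function below is a minimal sketch of that idea under that assumption; `parseList` is a hypothetical name for illustration, not part of AnswerAI's API.

```typescript
// Hypothetical sketch: turn raw LLM text into a trimmed list of values.
// `parseList` is an illustrative name, not an AnswerAI API.
function parseList(text: string, separator: string = ","): string[] {
  return text
    .trim()                      // drop leading/trailing whitespace and newlines
    .split(separator)            // break the response on the separator
    .map((item) => item.trim()); // remove spaces around each value
}

const raw = "red, green, blue\n";
console.log(parseList(raw)); // [ 'red', 'green', 'blue' ]
```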

Key Benefits

- Easily convert unstructured LLM output into a structured list

- Customize the parsing process to fit your specific needs

- Improve the reliability of parsed output with autofix functionality

How to Use

To use the Custom List Output Parser in your AnswerAI workflow:

- Locate the "Custom List Output Parser" node in the node library.

- Drag and drop the node onto your canvas.

- Connect the output of your LLM node to the input of the Custom List Output Parser node.

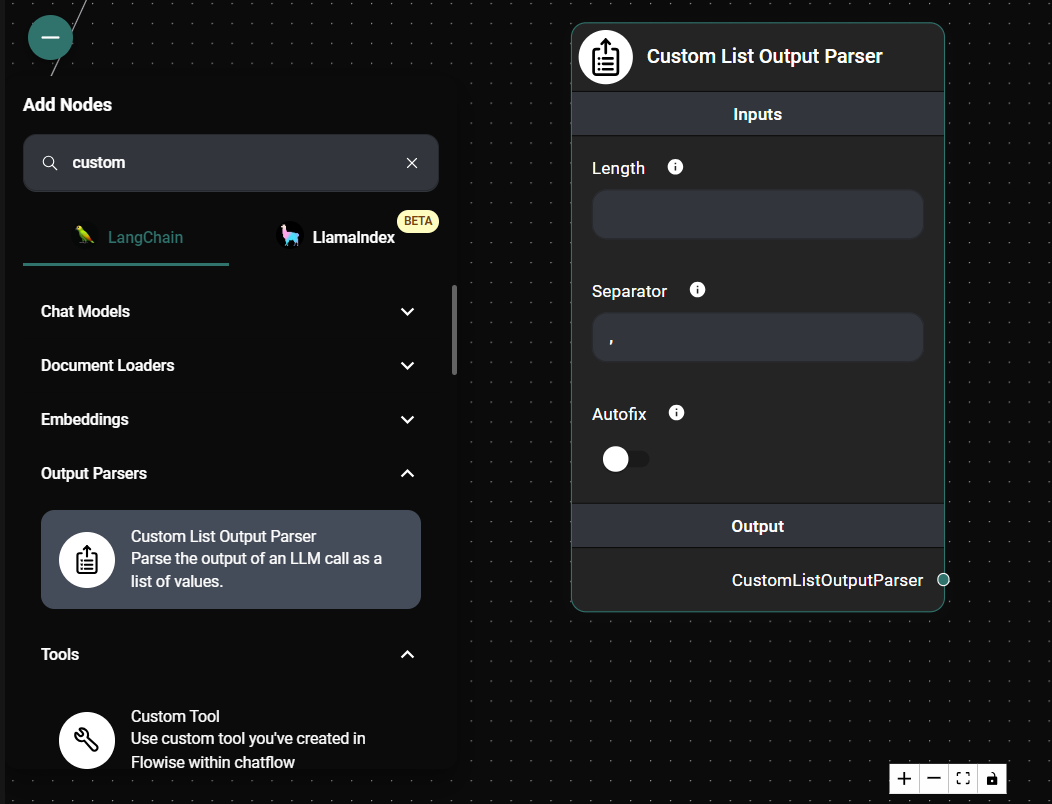

- Configure the node parameters:

  - Length: Specify the number of values you want in the output list (optional).

  - Separator: Define the character used to separate values in the list (default is ',').

  - Autofix: Enable this option to attempt automatic error correction if parsing fails.

- Connect the output of the Custom List Output Parser to your desired destination node.

Custom List Output Parser node configuration panel & Drop UI
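The Length and Separator parameters can be pictured as options on a split-and-trim step. The TypeScript sketch below assumes that Length works as a strict count check, so a response with a different number of items counts as a parsing failure; this fits the Troubleshooting advice further down, but the page does not confirm the exact behavior. `CustomListOptions` and `parseCustomList` are hypothetical names, not AnswerAI APIs.

```typescript
// Hypothetical option shape mirroring the node's Length and Separator parameters.
interface CustomListOptions {
  length?: number;    // expected item count; undefined means "any number"
  separator?: string; // defaults to "," like the node
}

function parseCustomList(text: string, options: CustomListOptions = {}): string[] {
  const separator = options.separator ?? ",";
  const items = text.trim().split(separator).map((item) => item.trim());

  // Assumption: a count mismatch is treated as a parsing failure.
  if (options.length !== undefined && items.length !== options.length) {
    throw new Error(
      `Incorrect number of items. Expected ${options.length}, got ${items.length}.`
    );
  }
  return items;
}

console.log(parseCustomList("cat; dog; bird", { separator: ";", length: 3 }));
// [ 'cat', 'dog', 'bird' ]

try {
  parseCustomList("cat, dog", { length: 3 });
} catch (err) {
  console.log((err as Error).message);
  // Incorrect number of items. Expected 3, got 2.
}
```

Leaving Length unset in this sketch accepts any number of items, which matches its "optional" label in the node.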

Tips and Best Practices

- When setting the "Length" parameter, make sure it matches the number of items your prompt asks the LLM to return.

- Choose a separator that is unlikely to appear within the list items themselves; otherwise a single item can be split into several values.

- Enable the "Autofix" option when dealing with potentially inconsistent LLM outputs to improve reliability.

- Test your parser with various LLM outputs to ensure it handles different scenarios correctly.
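To illustrate the separator and testing tips, the sketch below runs a few sample responses through a self-contained split-and-trim helper. It assumes the parser splits on the exact separator string and trims each piece; `splitItems` is a hypothetical name, and the sample strings are made up for illustration. In practice you would collect real responses from your own model.

```typescript
// Hypothetical split-and-trim helper (an assumption about how the parser behaves).
function splitItems(text: string, separator: string): string[] {
  return text.trim().split(separator).map((item) => item.trim());
}

// Made-up sample responses: each item itself contains a comma.
const commaOutput = "Paris, France, Tokyo, Japan";
const pipeOutput = "Paris, France | Tokyo, Japan";

// With "," the two locations are broken into four pieces.
console.log(splitItems(commaOutput, ",")); // [ 'Paris', 'France', 'Tokyo', 'Japan' ]

// With a separator that never appears inside an item, each location stays whole.
console.log(splitItems(pipeOutput, "|")); // [ 'Paris, France', 'Tokyo, Japan' ]

// A tiny harness: check several response variants against the expected count of 2.
// The last variant has a trailing separator, which yields an extra empty item.
const variants = [
  "Paris, France | Tokyo, Japan",
  "  Paris, France|Tokyo, Japan\n",
  "Paris, France | Tokyo, Japan | ",
];
for (const v of variants) {
  const items = splitItems(v, "|");
  const status = items.length === 2 ? "ok" : `unexpected count ${items.length}`;
  console.log(status, JSON.stringify(items));
}
```

Variants like the trailing-separator case are exactly the kind of inconsistency worth catching before relying on the parser in a workflow.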

Troubleshooting

Common issues and solutions:

- Incorrect number of items in the output list:

  - Verify that the "Length" parameter is set correctly.

  - Check whether the LLM consistently produces the expected number of items.

- Items not separated correctly:

  - Ensure the "Separator" parameter matches the separator used in the LLM output.

  - If the current separator also appears within list items, switch to a more distinctive one.

- Parsing errors:

  - Enable the "Autofix" option to attempt automatic error correction.

  - Review the LLM prompt to ensure it asks for output in the expected format.
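This page does not describe how Autofix works internally. A common approach, and the assumption behind this TypeScript sketch, is to catch the parsing error, send the failed output and the error message back to a model for correction, and parse the corrected text once more. `parseStrict`, `parseWithAutofix`, and the `Fixer` type are hypothetical; `stubFixer` stands in for the model call so the example runs offline.

```typescript
// Hypothetical: a function that asks a model to repair badly formatted output.
type Fixer = (badOutput: string, errorMessage: string) => Promise<string>;

// Strict parse: split, trim, and require an exact item count.
function parseStrict(text: string, length: number, separator = ","): string[] {
  const items = text.trim().split(separator).map((item) => item.trim());
  if (items.length !== length) {
    throw new Error(`Expected ${length} items, got ${items.length}.`);
  }
  return items;
}

// Assumed Autofix behavior: one parse attempt, then one repair attempt on failure.
async function parseWithAutofix(
  text: string,
  length: number,
  fixOutput: Fixer,
  separator = ","
): Promise<string[]> {
  try {
    return parseStrict(text, length, separator);
  } catch (err) {
    const repaired = await fixOutput(text, (err as Error).message);
    return parseStrict(repaired, length, separator); // throws again if still invalid
  }
}

// Stub fixer for offline testing: rewrites newline-separated output as comma-separated.
// A real fixer would send the bad output and error message to your LLM.
const stubFixer: Fixer = async (bad) => bad.split("\n").join(", ");

// The model answered one item per line instead of using commas.
parseWithAutofix("red\ngreen\nblue", 3, stubFixer).then((items) => {
  console.log(items); // [ 'red', 'green', 'blue' ]
});
```

Limiting the sketch to a single repair attempt keeps the cost bounded; if the repaired text still fails, the error is surfaced so the prompt can be reviewed.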